The best arguments I have are the ones that I lose.

Let me explain a little.

I like to think that I have a pretty good handle on Critical Thinking and debate skills. That being true, if I lose an argument then it's probably because I was wrong about something. That's a good thing. Before the argument, I believed something incorrect to be true. Now I am corrected. I can discard that incorrect knowledge and accept this newfound knowledge.

And of course, this is how it should be: When provided with better data, we should modify our position - even changing our opinion completely if required.

It's not a sign of weakness to change your mind on something. In fact, when I see people change their mind after losing an argument or being presented with credible contrary information, I greatly respect them for it.

What I don't have respect for is when people double down on their beliefs and refuse to acknowledge defeat in an argument.

When two people of differing opinions argue honestly, it is a thing of beauty. There's an exchange of ideas, there's a sharpening of the saw - even if neither protagonist changes their POV completely.

The friendships I have and the best conversations I have are with the people with whom I disagree the most. Conversations with people who agree with me are somewhat lacking in that respect.

What is Critical Thinking?

Critical thinking can be seen as having two components:

- a set of information and belief generating and processing skills, and

- the habit, based on intellectual commitment, of using those skills to guide behavior.

It is thus to be contrasted with:

- the mere acquisition and retention of information alone, because it involves a particular way in which information is sought and treated;

- the mere possession of a set of skills, because it involves the continual use of them; and

- the mere use of those skills ("as an exercise") without acceptance of their results.

In order to understand what a fallacy is, one must understand what an argument is. Very briefly, an argument consists of one or more premises and one conclusion. A premise is a statement (a sentence that is either true or false) that is offered in support of the claim being made, which is the conclusion (which is also a sentence that is either true or false).

There are two main types of arguments: deductive and inductive. A deductive argument is an argument such that the premises provide complete support for the conclusion. An inductive argument is an argument such that the premises provide some degree of support for the conclusion. If the premises actually provide the required degree of support for the conclusion, then the argument is a good one. A good deductive argument is known as a valid argument and is such that if all its premises are true, then its conclusion must be true. If the argument is valid and actually has all true premises, then it is known as a sound argument. If it is invalid or has one or more false premises, it will be unsound. A good inductive argument is known as a strong (or "cogent") inductive argument. It is such that if the premises are true, the conclusion is likely to be true.
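The definition of validity above can be checked mechanically for small arguments. What follows is only a minimal sketch: the helper names (is_valid, implies) are made up for illustration, and it handles nothing more than simple true/false statements. It tries every combination of truth values and looks for a case where all premises are true but the conclusion is false.

```python
from itertools import product

def implies(a, b):
    """Truth value of 'if a then b'."""
    return (not a) or b

def is_valid(premises, conclusion, n_vars):
    """Valid means: no assignment makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=n_vars):
        if all(p(*values) for p in premises) and not conclusion(*values):
            return False  # counterexample found, so the argument is invalid
    return True

# Modus ponens: if P then Q; P; therefore Q.
modus_ponens = is_valid(
    premises=[lambda p, q: implies(p, q), lambda p, q: p],
    conclusion=lambda p, q: q,
    n_vars=2,
)

# Affirming the consequent: if P then Q; Q; therefore P.
affirming_consequent = is_valid(
    premises=[lambda p, q: implies(p, q), lambda p, q: q],
    conclusion=lambda p, q: p,
    n_vars=2,
)

print(modus_ponens)          # True
print(affirming_consequent)  # False
```

Note that this only tests validity. Whether an argument is sound still depends on whether its premises are actually true, which no truth table can tell you.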

How to find out if you suck at Critical Thinking

Quite easily. Just ask yourself the following questions:

What is the difference between a fact and an inference?

Do I know the difference between evidence and supposition?

Do I know what a syllogistic fallacy is?

Can I engage in a Socratic argument?

Do I know what tu quoque means?

Do I know what post hoc ergo propter hoc means?

Do I know what non sequitur means?

Other than a place to consume alcohol, what is a 'licenced premise'?

If you struggle with any or all of the above, then you may suck at Critical Thinking. The good news is there is a cure!

The following websites provide a great framework for learning about CT. I highly recommend them - particularly the tutorial from austhink.

Fallacy Files - http://www.fallacyfiles.org/

Critical Thinking On The Web - http://austhink.com/critical/

Defining Critical Thinking - The Critical Thinking Community - http://www.criticalthinking.org/aboutCT/define_critical_thinking.cfm

Laird Wilcox on Extremist Traits - http://www.lairdwilcox.com/news/hoaxerproject.html

Basic Logic Terminology - https://philosophy.tamucc.edu/notes/basic-logic-terminology

Tired Arguments

Occasionally I encounter people who challenge the merits of CT, logic, rationality etc. So I summarise the most common of these here:

"I know all about Critical Thinking"

You do? That's great! You won't mind then if I insist you don't use logical fallacies and point out the flaws in your argument. You also won't have a problem keeping it logical.

You see, if I make a comment on your lack of CT skills, it's usually because your argument isn't logical, has multiple fallacies and doesn't follow even the most basic rules for an inductive or deductive argument. So, if you DO have the skills - then why aren't you using them?

"I've done my research"

No, you haven't. Not even remotely close.

Research is conducted by experts in a field of study at the peak of their career. Research is defined as a careful and detailed study into a specific problem, concern, or issue using the scientific method. Research adds to and extends the existing body of knowledge.

As part of my thesis project at Uni, I had to conduct a Literature Review. This involved finding all the prior research work on the topic I was working on (which incidentally was titled "Microprocessor controlled soft-starting of an Induction Motor"), reading all of that material (which was substantial) and writing a short report on it for my thesis supervisor. As part of the process, I had to keep a ridiculously detailed log of my activities, detailing which articles I had read and what publications I had checked out of the library. Everything I did was logged in my thesis log in minute detail, whether I used it or not.

[Image: Extract from my thesis log]

Yet, this was not considered to be research.

On top of that work, I spent the rest of the year (about 36 weeks) producing a solution to my problem involving five separate conceptual components, computer simulation, a working prototype, an 80-page thesis, a thesis presentation and a workshop demonstration.

Yet still, this was not research.

If I had continued on with my studies and eventually done novel work and pushed out the boundaries of science and engineering beyond where it was, then THAT would be considered research.

So, your 20 minutes of googling is not "research".

"You think you know everything"

As a matter of fact, you're the one who's acting like they know everything.

Even at times when I'm absolutely sure I'm correct, I remain open-minded to the possibility that there might have been loopholes I hadn't considered before or that maybe you know something else about the topic that I don't. I even keep in mind that there have been some times where I had jumped to conclusions too soon, and because of that I'm even more cautious about not being too closed-minded.

But no, by that statement, you are nowhere near as open-minded to the possibility of your own views being wrong as I am.

I'm guessing that when you feel that you can write someone off as a know-it-all, it just makes you feel better about dismissing them when they disagree with you about anything.

Very few people like being told that they're wrong, but rather than engage with that possibility, you feel I should just pander to your ignorance. Apparently, it's disrespectful to correct you when you're wrong because the only way I could possibly know more than you is if I'm the smartest person in the world. Hence, by that logic, it's YOU that thinks they know everything. Otherwise, you'd be open to the possibility that you just possibly could be wrong.

"I'm entitled to my own opinion"

No, you're not. You're only entitled to the opinions you can defend.

Put slightly better: You're entitled to your own opinions. You're not entitled to your own facts.

This concept is excellently covered here.

The TLDR is that if by opinion you mean "Which colour is best" or "Which food is nicest" then your opinion is absolutely valid. But if you're talking about "Did men walk on the moon" then it isn't. One type of opinion is subjective; the other is based upon layers of facts that lead to an inescapable conclusion and is either correct or incorrect. One opinion is valid, the other is complete bulldust.

Dara O'Briain captures the essence even better:

Now, Dara gives us the perfect introduction to the next asinine argument:

"Science doesn't know everything"

And to quote Dara's answer: "Of course it doesn't, otherwise it would stop".

For some reason, we seem to have a plague of scientific illiteracy on social media. This is compounded by the illiterate reveling in their illiteracy as though it's something to be proud of.

There's nothing magical about science. In fact, "science" isn't a thing, it's a discipline. Science is defined as any field of study governed by the scientific method. Broadly speaking, science is simply a systematic way of carefully and thoroughly observing nature and using consistent logic to evaluate results.

Which part of that do you disagree with? Do you disagree with being thorough? Using careful observation? Being systematic? Or using consistent logic?

There are seven steps in the scientific method.

1 – Make an Observation

2 – Ask a Question

3 – Do Background Research

4 – Form a Hypothesis

5 – Conduct an Experiment

6 – Analyze Results and Draw a Conclusion

7 – Report Your Results

The scientific method mandates that all aspects of scientific research must be:

- testable

- reproducible

- falsifiable

https://www.livescience.com/20896-science-scientific-method.html

The scientific method has been around for over 300 years. Adherence to the scientific method has provided us with every single technological advancement we recognise today and has doubled our life expectancy. If you reject the scientific method, you should forsake the computer you're using right now, your car, electricity, flying, your ready access to food and medicine.

There are some people who like to point to changes in scientific opinions over time as an example of science not working, when it is exactly the opposite. Following the scientific method requires you to change your opinions when new data shows that opinion to be incorrect. Sure, science has been proven wrong, but it's always by better science and not because of some fanciful dogmatic idea that someone had. It was because the evidence led in a different direction and scientists - as a body - moved in that direction.

"I'm a Skeptic"

Chances are - you aren't. Most likely, what you are is a denialist.

I'll draw a distinction here between capital 'S' Skeptics and small 's' skeptics. I don't expect everyone (including myself) to be a Skeptic. A Skeptic does not accept anything (including personal testimony) without objective, corroborative empirical evidence.

My own definition of skeptic leaves a little wiggle room and allows for a liberal application of Occam's Razor (which I'll deal with later). As a skeptic, you need to have a clear dividing line between that which is evidence-based and that which is faith-based. Anything involving science or medicine - by definition - must be evidence-based. If you reject that, it's not science and it's not medicine.

A skeptic (or Skeptic) assumes a position to be false (see the Null Hypothesis) unless there is evidence to suggest otherwise. That evidence may be weak or strong. Further experimentation may strengthen or weaken that connection; however, a skeptic will leave room for the hypothesis to be disproven by better, credible evidence. A skeptic will look at all the available evidence, evaluate that evidence critically and draw conclusions based upon the totality.

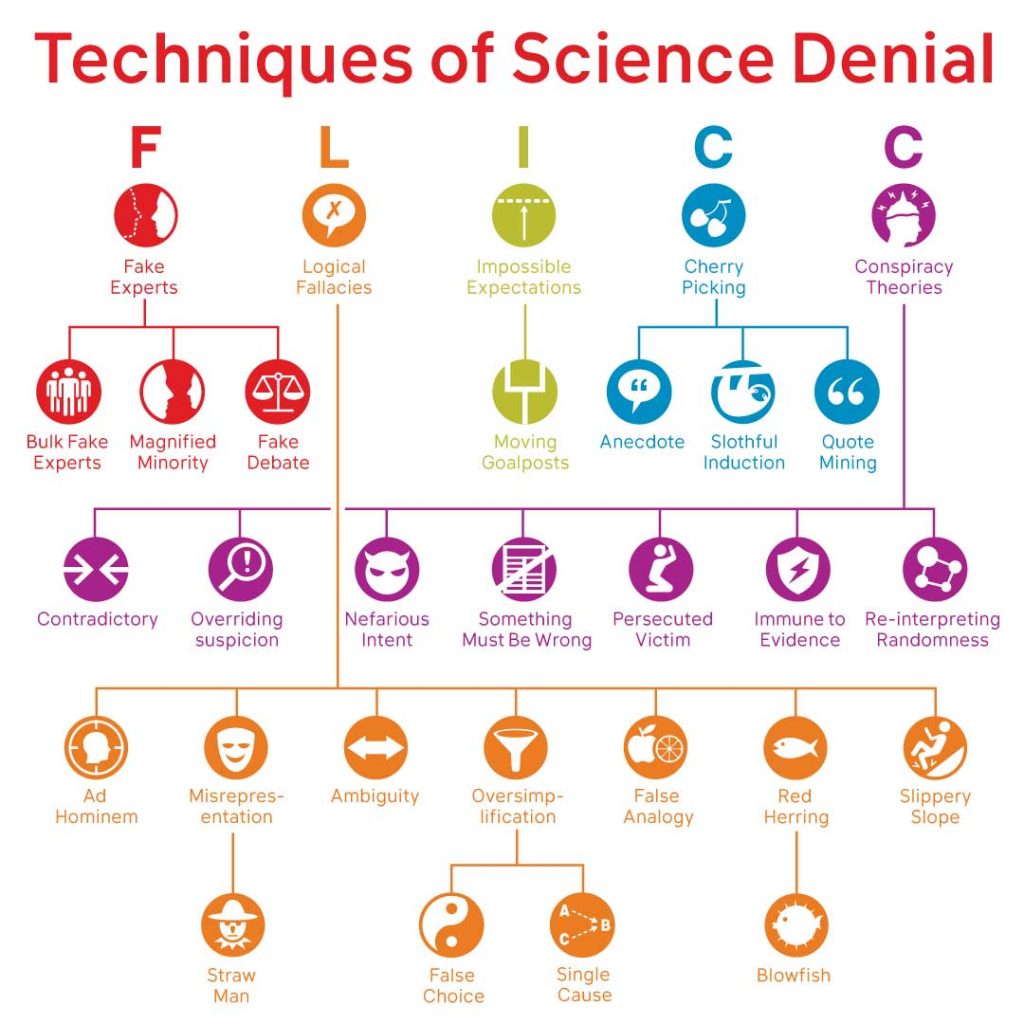

A denialist will start from a pre-conceived conclusion, cherry-pick the results that confirm that conclusion and ignore any evidence that disagrees with that conclusion.

Denialism is a process that employs some or all of five characteristic elements in a concerted way. The first is the identification of conspiracies. When the overwhelming body of scientific opinion believes that something is true, it is argued that this is not because those scientists have independently studied the evidence and reached the same conclusion. It is because they have engaged in a complex and secretive conspiracy. The peer review process is seen as a tool by which the conspirators suppress dissent, rather than as a means of weeding out papers and grant applications unsupported by evidence or lacking logical thought.

The second is the use of fake experts. These are individuals who purport to be experts in a particular area but whose views are entirely inconsistent with established knowledge. They have been used extensively by the tobacco industry since 1974, when a senior executive with R J Reynolds devised a system to score scientists working on tobacco in relation to the extent to which they were supportive of the industry's position.

The use of fake experts is often complemented by denigration of established experts and researchers, with accusations and innuendo that seek to discredit their work and cast doubt on their motivations.

The third characteristic is selectivity, drawing on isolated papers that challenge the dominant consensus or highlighting the flaws in the weakest papers among those that support it as a means of discrediting the entire field. An example of the former is the much-cited Lancet paper describing intestinal abnormalities in 12 children with autism, which merely suggested a possible link with immunization against measles, mumps and rubella. This has been used extensively by campaigners against immunization, even though 10 of the paper's 13 authors subsequently retracted the suggestion of an association. Fortunately, the work of the Cochrane Collaboration in promoting systematic reviews has made selective citation easier to detect.

Denialists are usually not deterred by the extreme isolation of their theories, but rather see it as the indication of their intellectual courage against the dominant orthodoxy and the accompanying political correctness, often comparing themselves to Galileo.

The fourth is the creation of impossible expectations of what research can deliver. For example, those denying the reality of climate change point to the absence of accurate temperature records from before the invention of the thermometer. Others use the intrinsic uncertainty of mathematical models to reject them entirely as a means of understanding a phenomenon.

The fifth is the use of misrepresentation and logical fallacies. Logical fallacies include the use of red herrings, or deliberate attempts to change the argument, and straw men, where the opposing argument is misrepresented to make it easier to refute.

So essentially, if you are using any of these techniques - or subscribe to them, chances are you are a denialist - not a skeptic.

https://academic.oup.com/eurpub/article/19/1/2/463780

Occam's Razor

Occam's Razor is how I get my wiggle room for calling myself a skeptic and not a Skeptic and why I consider the former to be more honest than the latter. Unfortunately, there's no simple way to adequately explain this - only the long way....

The true definition of Occam's Razor is "Never increase beyond that which is necessary, the number of variables used to describe a system" which is too complex for some people, so it usually gets shortened to "The simplest answer is usually the true answer". Which may be a case of applying Occam's Razor to Occam's Razor.

The latter definition is frequently used by Skeptics to enforce an extension of the Null Hypothesis on arguments. To wit: Two explanations exist - the simplest answer wins. But this use is actually contrary to the spirit of the original definition.

The origins come from mathematics. Specifically the Binomial Theorem.

Say you have a sequence of numbers as follows:

1, 2, 3

And you're asked what the sequence is. You could easily say it's obtained by adding 1 to the previous number - mathematically f(x)=x. However, you don't know if that is the case. You only know that it applies for the observable numbers. It's only a hypothesis. If correct, the next number should be 4. By experiment, you test your hypothesis by finding the next number in the sequence and find the sequence now to be:

1, 2, 3, 5

Your hypothesis is now disproven. A new hypothesis might be that this is the Fibonacci sequence - where the new number is the sum of the preceding two numbers - or f(x) = f(x-2) + f(x-1). However, you still don't know. It's an untested hypothesis. You would need to test that postulate by finding more numbers, confirming the hypothesis and eventually arriving at a working theorem.

However, you still don't know that it is the correct mechanism. All you can say is that it works for the known observable cases. In fact, according to the binomial theorem, there are an infinite number of equations that satisfy any observable sequence of numbers. These are known as trivial solutions.

In other words: Give me a list of numbers, I'll give you an equation that works for that. In fact, I can provide you with any number of equations that work for it.
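One way to see this for the 1, 2, 3 example is to add a term that vanishes at every observed position. The sketch below is an illustration only, using polynomials, and the name make_fit is made up: every value of c gives a formula that reproduces 1, 2, 3 exactly, yet each one predicts a different fourth number.

```python
from fractions import Fraction

def make_fit(c):
    """Return a formula that gives 1, 2, 3 at x = 1, 2, 3 for any choice of c.

    The extra term c*(x-1)*(x-2)*(x-3) is zero at the observed positions,
    so the three observations alone cannot tell these formulas apart.
    """
    def f(x):
        return x + c * (x - 1) * (x - 2) * (x - 3)
    return f

observed = [1, 2, 3]

for c in (0, Fraction(1, 6), 1, -5):
    f = make_fit(c)
    fits = [f(x) for x in (1, 2, 3)] == observed
    print(f"c = {c}: fits observed = {fits}, predicts next = {f(4)}")
```

With c = 0 this is just f(x)=x and predicts 4. With c = 1/6 it predicts 5, so it also "explains" 1, 2, 3, 5, but only because the extra variable was chosen to force the fit.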

The trouble is: only one of them is actually correct. The rest have been forced to fit.

So the Binomial Theorem has a fail safe: A theorem is only proven if there are fewer variables than observable results. Which is the first part of Occam's Razor.

There is a numerical analysis method for deriving binomial variables from any sequence of numbers which is pretty kool, but I won't go into it in too much detail here. It involves comparing the differences between each number and the differences between them and so on. With each iteration, you have one less number. If at any point, the numbers are all equal and the next difference line is zero, you have a potentially valid solution according to the Binomial Theorem. This is known as a non-trivial solution.
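The differencing procedure described above is usually called the method of finite differences. Here is a minimal sketch of it; the function names are hypothetical, and treating "the constant row has more than one entry" as the test for a non-trivial solution is my reading of the description, not a formal definition.

```python
def difference_table(seq):
    """Build rows of successive differences until a row is constant or has one value."""
    rows = [list(seq)]
    while len(rows[-1]) > 1 and len(set(rows[-1])) > 1:
        prev = rows[-1]
        rows.append([b - a for a, b in zip(prev, prev[1:])])
    return rows

def is_non_trivial(seq):
    """True if the differences become constant while more than one value remains."""
    return len(difference_table(seq)[-1]) > 1

def predict_next(seq):
    """Extend the sequence by one term, assuming the last difference row stays constant."""
    return sum(row[-1] for row in difference_table(seq))

squares = [1, 4, 9, 16, 25]
print(difference_table(squares))  # [[1, 4, 9, 16, 25], [3, 5, 7, 9], [2, 2, 2]]
print(is_non_trivial(squares))    # True
print(predict_next(squares))      # 36

print(is_non_trivial([1, 2, 3, 5]))  # False
print(predict_next([1, 2, 3, 5]))    # 9
```

For 1, 2, 3, 5 the differences only settle once a single number is left, so the fitted formula needs as many variables as there are observations. It has been forced to fit, and its prediction of 9 deserves no more trust than any other guess.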

It actually may NOT be the correct solution. But it is the non-trivial solution that has the fewest number of variables required to satisfy the sequence for all observable numbers in the sequence.

What Occam's Razor says is that it doesn't matter if the solution is correct or not. The equation works for all observable states and is therefore valid. You don't need a more complicated equation that will only give you exactly the same results.

Newton's Laws of Motion have been around for several centuries now. They work. They work well. However, at the start of the 20th century it was found that at both ends of the spectrum, Newton didn't work so well. For very fast or very heavy objects, Einstein found Newton's Laws were breaking down. The result was Special Relativity, which uses v²/c² as part of the calculations, and voila! The equations explained the observations, which were confirmed through experimental testing. Newton's Laws are actually a simplification of Special Relativity for the vast majority of cases where v<<c.
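To get a feel for how good the simplification is, here is a rough numerical sketch comparing kinetic energy under the two descriptions. It uses the standard Lorentz factor 1/sqrt(1 - v²/c²), and the function names are my own. A ratio of 1 means Newton and Special Relativity agree.

```python
import math

def lorentz_factor(beta):
    """gamma = 1 / sqrt(1 - v^2/c^2), where beta = v/c."""
    return 1.0 / math.sqrt(1.0 - beta ** 2)

def kinetic_energy_ratio(beta):
    """Relativistic kinetic energy divided by the Newtonian value at speed beta = v/c."""
    relativistic = lorentz_factor(beta) - 1.0  # (gamma - 1), in units of m*c^2
    newtonian = 0.5 * beta ** 2                # (1/2)*v^2/c^2, in units of m*c^2
    return relativistic / newtonian

for beta in (0.001, 0.01, 0.1, 0.5, 0.9):
    print(f"v = {beta}c  ratio = {kinetic_energy_ratio(beta):.6f}")
```

At a thousandth of the speed of light (still about 300 km per second) the two answers differ by less than one part in a million. At 0.9c the Newtonian figure is out by a factor of more than three.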

At the other end of the spectrum you have the microscopically small situations where there are no gradual adjustments - just 'leaps' from one microscopically small state to the next. This was first proposed by the physicist Max Planck, whose work on quanta laid the foundation for what became known as Quantum Mechanics. It required a whole new set of equations to explain the observations. These equations were also verified by experimentation. Once again, Newton's Laws were found to be a simplification of Quantum Mechanics.

As a side note: Quantum Mechanics is weird. In fact, the weirder it is, the more correct it seems to be. See Quantum Entanglement and spooky action at a distance.

So, when things are very, very small we use Quantum Mechanics. When they are very, very fast or very, very large we use Relativity. The majority of times we use Newton's Laws because they are much simpler and adequately describe all the systems we usually encounter.

Is Newton wrong? No, it's a simplification of a more complex system. So we apply Occam's Razor to it.

In our trivial case, if we only observed the three number sequence and never encountered anything outside that, we could be justified in using f(x)=x simply because it works for all systems we encounter. This is despite the fact that it is actually wrong.

Which brings us back to Quantum Mechanics and Relativity. Although Newton's Laws can be reconciled to both as a simplification of each, Quantum Mechanics and Relativity are actually incompatible with each other.

In other words, all of them are actually wrong.

There is no unified set of equations that can explain all systems. The search for such a set of equations is the Holy Grail of physics and is often referred to as The Grand Unified Theory (strictly speaking, a "Theory of Everything" once gravity is included), explaining the interactions of the four known forces. There are many proposed models - all of them unbelievably complex, none of which have been demonstrated by experimentation. In fact, all physicists have so far been able to do is disprove models, although some progress has been made in identifying other key particles.

So, do we stop using Newton? No, because it works. And in the absence of a GUT, we simply continue on with the expectation that a GUT will someday be discovered (or not) that will explain why Newton, Quantum Mechanics and Relativity all work for their particular observable situations.

Physics is used to this. In fact, light can be shown to act as both a wave and a particle. But it actually can't be both - not by our current understanding of physics. So, we accept that it does behave as both, and by Bohr's complementarity principle we accept that we treat it as either one or the other, but not both at the same time.

Thus we hold two independent truths together whilst acknowledging they are contradictory according to our limited understanding of their true nature. Quantum Mechanics does not disprove Relativity, and Relativity does not disprove Quantum Mechanics, despite the two being mutually exclusive and despite Einstein's best efforts. They are both true independently within their own spheres of evidence.

Now, applying this to small 's' skepticism: What we don't know will always exceed what we do know. It is quite possible - and indeed scientific - to hold two seemingly contradictory opinions in completely different spheres of thought or study, each with their own well established and independent methods of investigation and analysis as being true and reliable.

Now, it's not my intention to draw a long bow here. I'm not stating something that's overreaching. I'm simply stating that just because there seems to be an inherent contradiction between two otherwise unrelated things, it does not necessarily mean one is right and the other is wrong. They both could simply be oversimplifications of a much larger and more complex system. In fact, the more we understand the Universe, the more often these situations arise.

Attempting to apply simple explanations to complex systems will more likely be incorrect than correct, particularly with the narrow set of data you are likely to be using. So don't be surprised if someone in another field of study, looking at completely different data, arrives at a conclusion that is different to yours.

Now, I've deliberately made this explanation as broad as possible. That way it's less likely to offend anyone's confirmation bias. But at least if I bring up Occam's Razor - you'll know what I mean.

Other objections

Most other objections (such as the Galileo Gambit) can be dealt with as simple logical fallacies. I suggest a familiarity with these.

A long-term project of mine is to build a website where all arguments must be graphically represented by a mind map. There's software that does this, but it's difficult to do in realtime and in a medium accessible to all. I used to use argunet for this purpose, but they are closing up shop, so I'll need a mechanism of my own. So, watch this space...

This genuinely is well written, Wayne, and is either the product of dedicated interest, chronic insomnia, Covid-19 based isolation, or a combination of all three. Happily, I only found one spelling mistake, "Follwoing" but I;m sure there are more. Seriously though, an enjoyable read for a self confessed science idiot. There is such a dearth of critical thinking in our current politically polarised situation. This is (almost) a breath of fresh air. The only thing missing is a Youtube link to a dancing cat.

LOL! Well, there's a lot of plagiarism in there as well. I've tried to source as much as I can without it reading like a pseudo-academic article. Readability and accuracy was my goal. I don't pretend to be an expert. The problem I find is that most experts try to communicate only with other experts. There are some excellent blogs by experts, but I find when I link to them, nobody reads them.

I have had so many of exactly the same type of argument on social media that it feels easier to write the arguments down in one easily accessible location.